| WRF (v4.4) | MPAS-A (v7.3) | CPAS (v1.1)  |

|

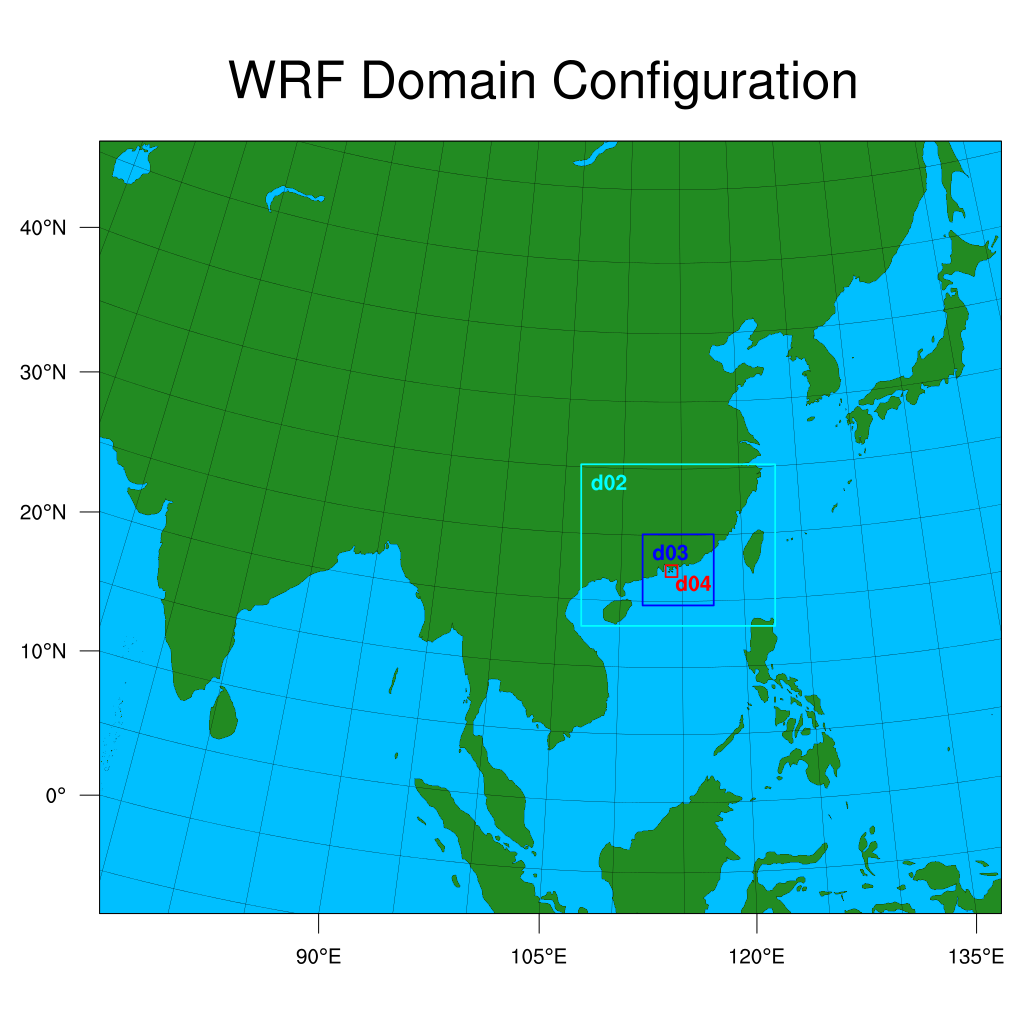

| Obtaining/defining regional high-resolution grid |    Follow WRF’s best practices to define nested domains. Follow WRF’s best practices to define nested domains. |

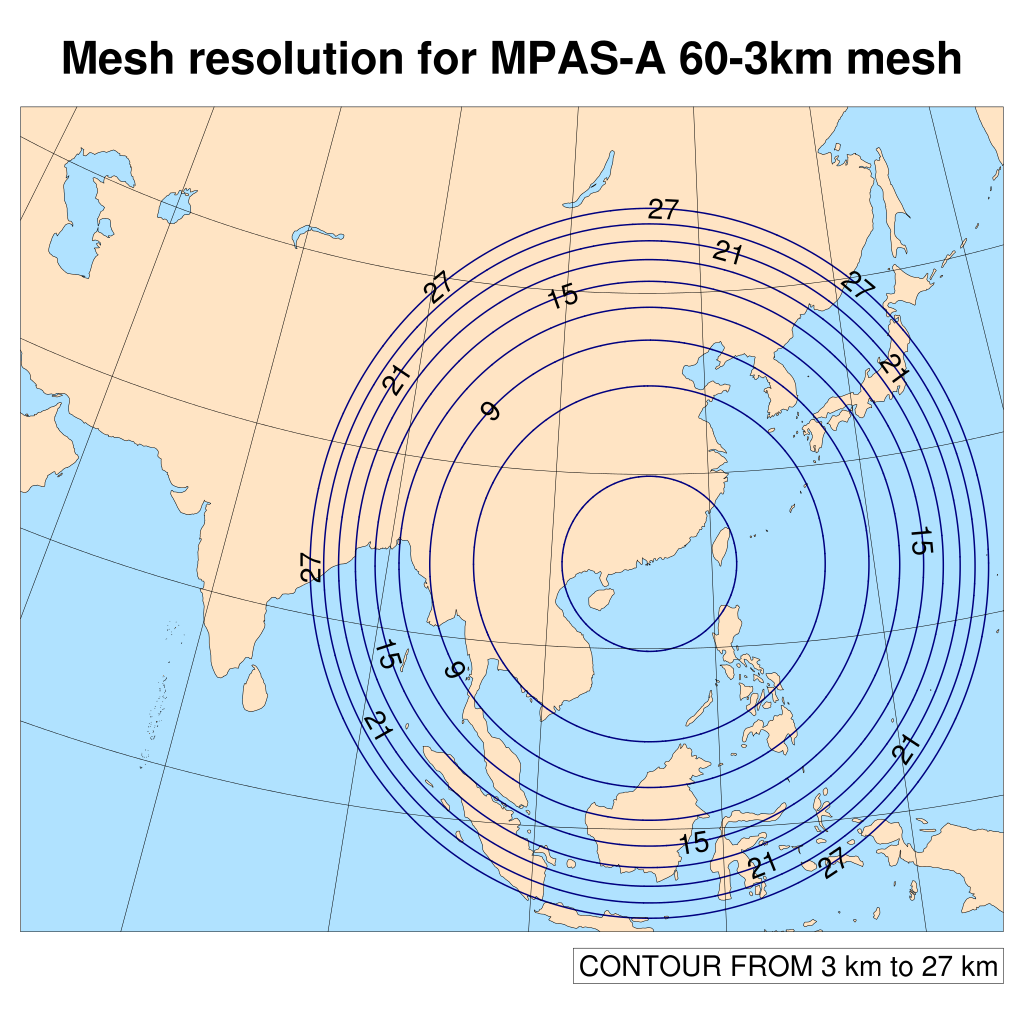

Download available standard meshes from official site. Download available standard meshes from official site. |

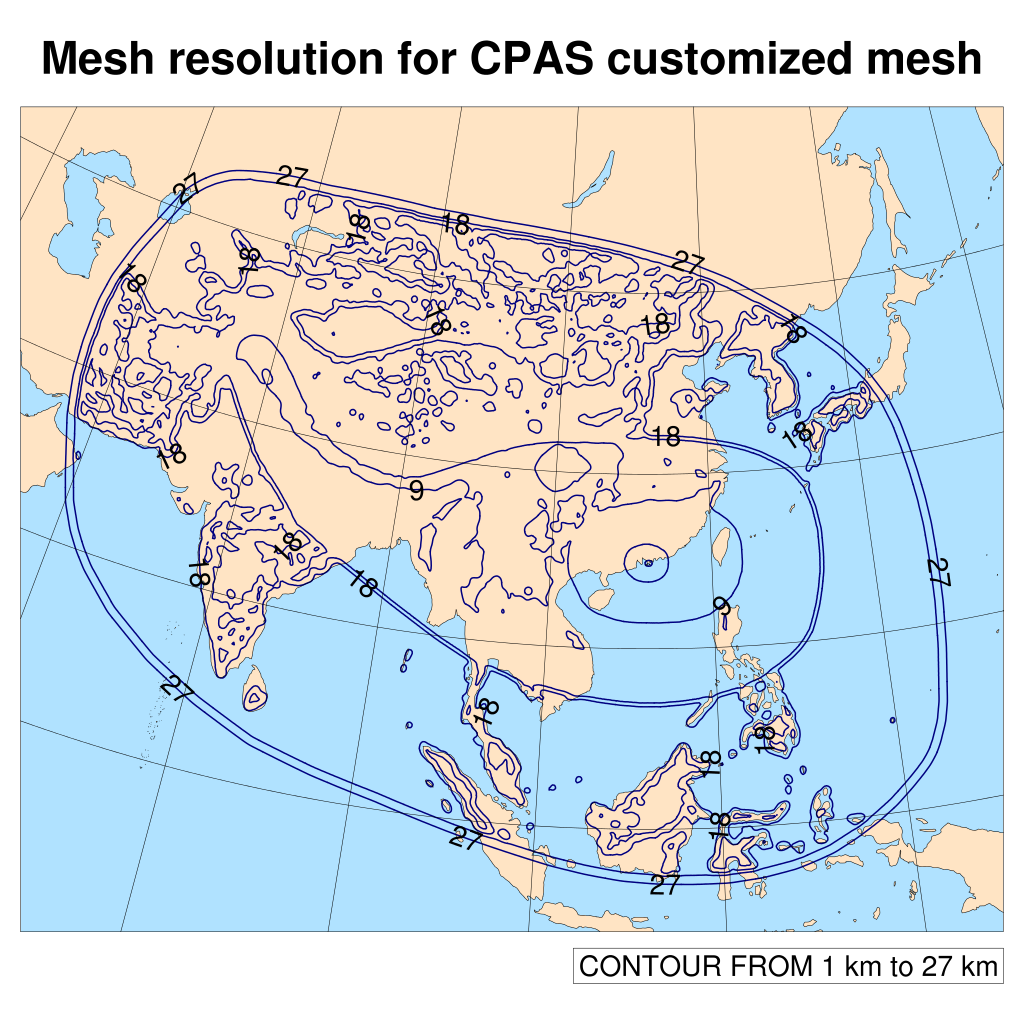

Specify your regions and corresponding target resolutions via cloud-based service platform. Specify your regions and corresponding target resolutions via cloud-based service platform. |

| Shape of region |

Rectangular Rectangular |

Circular or elliptical (in all available meshes.) Circular or elliptical (in all available meshes.) |

Arbitrarily shaped Arbitrarily shaped |

| Resolution variability |

Example: 27km - 9km - 3km - 1km |

Example: 60km - 3km mesh |

Example: 100km - 200m |

| Automatic resolution boost for orography |    nil nil |

nil nil |

Supported! Supported! |

| Automatic resolution boost for coastline |    nil nil |

nil nil |

Supported! Supported! |

| Population of geographic data |

Example: topo_10m, topo_gmted2010_5m, topo_2m, topo_gmted2020_30s |

Example: topo_30, topo_gmted2020_30s |

Example: topo_10m, topo_5m, topo_2m, topo_30s, topo_15s, topo_3s, |

| Analyzing simulation error in resolution jump / transition |    N/A N/A |

N/A N/A |

Shallow water wave test and plots of error can be generated by the platform(Experimental) Shallow water wave test and plots of error can be generated by the platform(Experimental) |
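
To make the "Resolution variability" examples in the table above easier to compare, the following minimal sketch computes the refinement ratio between consecutive resolutions and the overall coarse-to-fine ratio for each example. The function names `step_ratios` and `total_ratio` are hypothetical helpers introduced only for this illustration; they are not part of WRF, MPAS-A, or CPAS. For WRF, the per-nest ratio is what would typically be set through the `parent_grid_ratio` namelist entry, which is mentioned here only as context.

```python
def step_ratios(resolutions_km):
    """Return the refinement ratio between each consecutive pair of resolutions."""
    return [coarse / fine
            for coarse, fine in zip(resolutions_km, resolutions_km[1:])]


def total_ratio(resolutions_km):
    """Return the ratio of the coarsest to the finest resolution."""
    return resolutions_km[0] / resolutions_km[-1]


# Resolution examples copied from the table (all values in km).
examples = {
    "WRF": [27.0, 9.0, 3.0, 1.0],   # chain of nested domains
    "MPAS-A": [60.0, 3.0],          # variable-resolution global mesh
    "CPAS": [100.0, 0.2],           # 100 km down to 200 m
}

for name, resolutions in examples.items():
    print(name, step_ratios(resolutions), total_ratio(resolutions))
```

The WRF example reaches 1 km through three successive nests with the same ratio at each level, while the MPAS-A and CPAS examples describe a single mesh whose cell size varies between the two listed values.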

| | WRF (v4.4) | MPAS-A (v7.3) | CPAS (v1.0) |
|---|---|---|---|
| Model type | Limited-area model targeting high-resolution simulation within the domain. | Global model with moderate regional resolution refinement. | Global model with arbitrary resolution refinement, including extreme resolution refinement for a small region. |
| Forecast accuracy and time horizon | Very accurate within the high-resolution region up to about 3 days. | Generally accurate over a medium-range time horizon of up to about 9 days. | Very accurate within the high-resolution region and generally accurate over a medium-range time horizon of up to about 9 days, given an appropriately designed mesh. |
| Time stepping | Each domain uses its own time step size, specified in the WRF namelist. | (This time step size is limited by the smallest cell in the entire mesh, as required by the stability condition for time integration. The presence of a small cell in the mesh makes the computational resource requirement prohibitively large.) | (This not only reduces the required computational resources, but also enables the use of extreme variable resolution on a global mesh.) |
| Hierarchical time-stepping (HTS) report | N/A | N/A | Available after mesh generation, showing the regions of each time-stepping level and the resource utilization. |
| Parallelization and load balancing | The computation of each domain is parallelized, but the nested domains are executed serially, one after another. | The mesh of the whole globe is fully partitioned and the parallelization is excellent. | The mesh of the whole globe is fully partitioned, taking time-stepping into account. |
| Scalability | Limited by the domain with the smallest number of grid cells. | Extremely scalable. | Very scalable. |
| Scale-awareness of physics model | Different domains may use different physics models. | No scale-awareness in the convection schemes, except for the Grell-Freitas scheme. | Automatically switches off cumulus parameterization for small cells. |
| Handling wave reflection in resolution jump / transition | Handled by a specified zone and a relaxation zone near the lateral boundaries at the resolution jump. | Handled by filtering, with default or user-specified coefficients for horizontal diffusion, for a gradual resolution transition. | The mesh generation algorithm creates a customized mesh with a smooth resolution transition that can be handled by filtering with the default coefficients for horizontal diffusion. |
| Model inconsistency between the regional model and the global driving model | Differences in physics parameterizations, especially those related to moisture, between WRF and the driving global model result in artifacts and spurious effects that propagate from the lateral boundaries into the region of interest. | No such problem. | No such problem. |
| Four-dimensional data assimilation | Supported | nil | Supported, with a scaled FDDA option for variable-resolution meshes. |
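
The "Time stepping" row above contrasts a single time step limited by the smallest cell with time stepping that varies across the mesh. The sketch below illustrates the cost difference under stated assumptions, and it is not the CPAS or MPAS-A implementation: the stable step is taken as roughly 6 seconds per km of cell size (a common rule of thumb), hierarchical levels are assumed to be power-of-two multiples of the smallest step, and the mesh composition is invented for illustration. The function names `uniform_cost` and `hierarchical_cost` are hypothetical.

```python
SECONDS_PER_KM = 6.0   # assumed rule of thumb: stable dt of about 6 s per km
HORIZON_S = 86400.0    # simulate one day


def uniform_cost(cells_by_dx):
    """Cell updates when every cell uses the step of the smallest cell."""
    dt = SECONDS_PER_KM * min(cells_by_dx)
    return (HORIZON_S / dt) * sum(cells_by_dx.values())


def hierarchical_cost(cells_by_dx):
    """Cell updates when each cell size uses the largest power-of-two
    multiple of the smallest step that stays within its own stable step."""
    dt_min = SECONDS_PER_KM * min(cells_by_dx)
    total = 0.0
    for dx_km, n_cells in cells_by_dx.items():
        stable_dt = SECONDS_PER_KM * dx_km
        dt = dt_min
        while dt * 2 <= stable_dt:   # climb levels while still stable
            dt *= 2
        total += n_cells * HORIZON_S / dt
    return total


# Hypothetical mesh: cell size in km -> number of cells of that size.
mesh = {60.0: 100_000, 15.0: 20_000, 3.0: 50_000}

print("uniform:", uniform_cost(mesh))
print("hierarchical:", hierarchical_cost(mesh))
print("speed-up:", uniform_cost(mesh) / hierarchical_cost(mesh))
```

In this toy mesh most of the saving comes from the coarse cells, which no longer have to advance at the step size dictated by the 3 km region. The benefit grows as the ratio between the coarsest and finest cells increases, which is consistent with the table's remark about extreme variable resolution on a global mesh.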